The fourth quarter

Competing risks in the Stata News

Including covariates in crossed-effects models

The manual entry for xtmixed documents all the official features in the command, and several applications. However, it would be impossible to address all the models that can be fitted with this command in a manual entry. I want to show you how to include covariates in a crossed-effects model.

Let me start by reviewing the crossed-effects notation for xtmixed. I will use the homework dataset from Kreft and de Leeuw (1998) (a subsample from the National Education Longitudinal Study of 1988). You can download the dataset from the webpage for Rabe-Hesketh & Skrondal (2008) (http://www.stata-press.com/data/mlmus2.html), and run all the examples in this entry.

If we want to fit a model with variable math (math grade) as outcome, and two crossed effects: variable region and variable urban, the standard syntax would be:

(1) xtmixed math ||_all:R.region || _all: R.urban

The underlying model for this syntax is

math_ijk = b + u_i + v_j + eps_ijk

where i represents the region and j represents the level of variable urban, u_i are i.i.d, v_j are i.i.d, and eps_ijk are i.i.d, and all of them are independent from each other.

The standard notation for xtmixed assumes that levels are always nested. In order to fit non-nested models, we create an artificial level with only one category consisting of all the observations; in addition, we use the notation R.var, which indicates that we are including dummies for each category of variable var, while constraining the variances to be the same.

That is, if we write

xtmixed math ||_all:R.region

we are just fitting the model:

xtmixed math || region:

but we are doing it in a very inefficient way. What we are doing is exactly the following:

generate one = 1 tab region, gen(id_reg) xtmixed math || one: id_reg*, cov(identity) nocons

That is, instead of estimating one variance parameter, we are estimating four, and constraining them to be equal. Therefore, a more efficient way to fit our mixed model (1), would be:

xtmixed math ||_all:R.region || urban:

This will work because urban is nested in one. Therefore, if we want to include a covariate (also known as random slope) in one of the levels, we just need to place that level at the end and use the usual syntax for random slope, for example:

xtmixed math public || _all:R.region || urban: public

Now let’s assume that we want to include random coefficients in both levels; how would we do that? The trick is to use the _all notation to include a random coefficient in the model. For example, if we want to fit

(2) xtmixed math meanses || region: meanses

we are assuming that variable meanses (mean SES per school) has a different effect (random slope) for each region. This model can be expressed as

math_ik = x_ik*b + sigma_i + alpha_i*meanses_ik

where sigma_i are i.i.d, alpha_i are i.i.d, and sigmas and alphas are independent from each other. This model can be fitted by generating all the interactions of meanses with the regions, including a random alpha_i for each interaction, and restricting their variances to be equal. In other words, we can fit model (2) also as follows:

unab idvar: id_reg*

foreach v of local idvar{

gen inter`v' = meanses*`v'

}

xtmixed math meanses ///

|| _all:inter*, cov(identity) nocons ///

|| _all: R.region

Finally, we can use all these tools to include random coefficients in both levels, for example:

xtmixed math parented meanses public || _all: R.region || /// _all:inter*, cov(identity) nocons || urban: public

References:

Kreft, I.G.G and de J. Leeuw. 1998. Introducing Multilevel Modeling. Sage.

Rabe-Hesketh, S. and A. Skrondal. 2008. Multilevel and Longitudinal Modeling Using Stata, Second Edition. Stata Press

Positive log-likelihood values happen

From time to time, we get a question from a user puzzled about getting a positive log likelihood for a certain estimation. We get so used to seeing negative log-likelihood values all the time that we may wonder what caused them to be positive.

First, let me point out that there is nothing wrong with a positive log likelihood.

The likelihood is the product of the density evaluated at the observations. Usually, the density takes values that are smaller than one, so its logarithm will be negative. However, this is not true for every distribution.

For example, let’s think of the density of a normal distribution with a small standard deviation, let’s say 0.1.

. di normalden(0,0,.1) 3.9894228

This density will concentrate a large area around zero, and therefore will take large values around this point. Naturally, the logarithm of this value will be positive.

. di log(3.9894228) 1.3836466

In model estimation, the situation is a bit more complex. When you fit a model to a dataset, the log likelihood will be evaluated at every observation. Some of these evaluations may turn out to be positive, and some may turn out to be negative. The sum of all of them is reported. Let me show you an example.

I will start by simulating a dataset appropriate for a linear model.

clear program drop _all set seed 1357 set obs 100 gen x1 = rnormal() gen x2 = rnormal() gen y = 2*x1 + 3*x2 +1 + .06*rnormal()

I will borrow the code for mynormal_lf from the book Maximum Likelihood Estimation with Stata (W. Gould, J. Pitblado, and B. Poi, 2010, Stata Press) in order to fit my model via maximum likelihood.

program mynormal_lf

version 11.1

args lnf mu lnsigma

quietly replace `lnf' = ln(normalden($ML_y1,`mu',exp(`lnsigma')))

end

ml model lf mynormal_lf (y = x1 x2) (lnsigma:)

ml max, nolog

The following table will be displayed:

. ml max, nolog

Number of obs = 100

Wald chi2(2) = 456919.97

Log likelihood = 152.37127 Prob > chi2 = 0.0000

------------------------------------------------------------------------------

y | Coef. Std. Err. z P>|z| [95% Conf. Interval]

-------------+----------------------------------------------------------------

eq1 |

x1 | 1.995834 .005117 390.04 0.000 1.985805 2.005863

x2 | 3.014579 .0059332 508.08 0.000 3.00295 3.026208

_cons | .9990202 .0052961 188.63 0.000 .98864 1.0094

-------------+----------------------------------------------------------------

lnsigma |

_cons | -2.942651 .0707107 -41.62 0.000 -3.081242 -2.804061

------------------------------------------------------------------------------

We can see that the estimates are close enough to our original parameters, and also that the log likelihood is positive.

We can obtain the log likelihood for each observation by substituting the estimates in the log-likelihood formula:

. predict double xb

. gen double lnf = ln(normalden(y, xb, exp([lnsigma]_b[_cons])))

. summ lnf, detail

lnf

-------------------------------------------------------------

Percentiles Smallest

1% -1.360689 -1.574499

5% -.0729971 -1.14688

10% .4198644 -.3653152 Obs 100

25% 1.327405 -.2917259 Sum of Wgt. 100

50% 1.868804 Mean 1.523713

Largest Std. Dev. .7287953

75% 1.995713 2.023528

90% 2.016385 2.023544 Variance .5311426

95% 2.021751 2.023676 Skewness -2.035996

99% 2.023691 2.023706 Kurtosis 7.114586

. di r(sum)

152.37127

. gen f = exp(lnf)

. summ f, detail

f

-------------------------------------------------------------

Percentiles Smallest

1% .2623688 .2071112

5% .9296673 .3176263

10% 1.52623 .6939778 Obs 100

25% 3.771652 .7469733 Sum of Wgt. 100

50% 6.480548 Mean 5.448205

Largest Std. Dev. 2.266741

75% 7.357449 7.564968

90% 7.51112 7.56509 Variance 5.138117

95% 7.551539 7.566087 Skewness -.8968159

99% 7.566199 7.56631 Kurtosis 2.431257

We can see that some values for the log likelihood are negative, but most are positive, and that the sum is the value we already know. In the same way, most of the values of the likelihood are greater than one.

As an exercise, try the commands above with a bigger variance, say, 1. Now the density will be flatter, and there will be no values greater than one.

In short, if you have a positive log likelihood, there is nothing wrong with that, but if you check your dispersion parameters, you will find they are small.

Use poisson rather than regress; tell a friend

Do you ever fit regressions of the form

ln(yj) = b0 + b1x1j + b2x2j + … + bkxkj + εj

by typing

. generate lny = ln(y)

. regress lny x1 x2 … xk

The above is just an ordinary linear regression except that ln(y) appears on the left-hand side in place of y.

The next time you need to fit such a model, rather than fitting a regression on ln(y), consider typing

. poisson y x1 x2 … xk, vce(robust)

which is to say, fit instead a model of the form

yj = exp(b0 + b1x1j + b2x2j + … + bkxkj + εj)

Wait, you are probably thinking. Poisson regression assumes the variance is equal to the mean,

E(yj) = Var(yj) = exp(b0 + b1x1j + b2x2j + … + bkxkj)

whereas linear regression merely assumes E(ln(yj)) = b0 + b1x1j + b2x2j + … + bkxkj and places no constraint on the variance. Actually regression does assume the variance is constant but since we are working the logs, that amounts to assuming that Var(yj) is proportional to yj, which is reasonable in many cases and can be relaxed if you specify vce(robust).

In any case, in a Poisson process, the mean is equal to the variance. If your goal is to fit something like a Mincer earnings model,

ln(incomej) = b0 + b1*educationj + b2*experiencej + b3*experiencej2 + εj

there is simply no reason to think that the the variance of the log of income is equal to its mean. If a person has an expected income of $45,000, there is no reason to think that the variance around that mean is 45,000, which is to say, the standard deviation is $212.13. Indeed, it would be absurd to think one could predict income so accurately based solely on years of schooling and job experience.

Nonetheless, I suggest you fit this model using Poisson regression rather than linear regression. It turns out that the estimated coefficients of the maximum-likelihood Poisson estimator in no way depend on the assumption that E(yj) = Var(yj), so even if the assumption is violated, the estimates of the coefficients b0, b1, …, bk are unaffected. In the maximum-likelihood estimator for Poisson, what does depend on the assumption that E(yj) = Var(yj) are the estimated standard errors of the coefficients b0, b1, …, bk. If the E(yj) = Var(yj) assumption is violated, the reported standard errors are useless. I did not suggest, however, that you type

. poisson y x1 x2 … xk

I suggested that you type

. poisson y x1 x2 … xk, vce(robust)

That is, I suggested that you specify that the variance-covariance matrix of the estimates (of which the standard errors are the square root of the diagonal) be estimated using the Huber/White/Sandwich linearized estimator. That estimator of the variance-covariance matrix does not assume E(yj) = Var(yj), nor does it even require that Var(yj) be constant across j. Thus, Poisson regression with the Huber/White/Sandwich linearized estimator of variance is a permissible alternative to log linear regression — which I am about to show you — and then I’m going to tell you why it’s better.

I have created simulated data in which

yj = exp(8.5172 + 0.06*educj + 0.1*expj – 0.002*expj2 + εj)

where εj is distributed normal with mean 0 and variance 1.083 (standard deviation 1.041). Here’s the result of estimation using regress:

. regress lny educ exp exp2

Source | SS df MS Number of obs = 5000

-------------+------------------------------ F( 3, 4996) = 44.72

Model | 141.437342 3 47.1457806 Prob > F = 0.0000

Residual | 5267.33405 4996 1.05431026 R-squared = 0.0261

-------------+------------------------------ Adj R-squared = 0.0256

Total | 5408.77139 4999 1.08197067 Root MSE = 1.0268

------------------------------------------------------------------------------

lny | Coef. Std. Err. t P>|t| [95% Conf. Interval]

-------------+----------------------------------------------------------------

educ | .0716126 .0099511 7.20 0.000 .052104 .0911212

exp | .1091811 .0129334 8.44 0.000 .0838261 .1345362

exp2 | -.0022044 .0002893 -7.62 0.000 -.0027716 -.0016373

_cons | 8.272475 .1855614 44.58 0.000 7.908693 8.636257

------------------------------------------------------------------------------

I intentionally created these data to produce a low R-squared.

We obtained the following results:

truth est. S.E.

----------------------------------

educ 0.0600 0.0716 0.0100

exp 0.1000 0.1092 0.0129

exp2 -0.0020 -0.0022 0.0003

-----------------------------------

_cons 8.5172 8.2725 0.1856 <- unadjusted (1)

9.0587 8.7959 ? <- adjusted (2)

-----------------------------------

(1) To be used for predicting E(ln(yj))

(2) To be used for predicting E(yj)

Note that the estimated coefficients are quite close to the true values. Ordinarily, we would not know the true values, except I created this artificial dataset and those are the values I used.

For the intercept, I list two values, so I need to explain. We estimated a linear regression of the form,

ln(yj) = b0 + Xjb + εj

As with all linear regressions,

E(ln(yj)) = E(b0 + Xjb + εj)

= b0 + Xjb + E(εj)

= b0 + Xjb

We, however, have no real interest in E(ln(yj)). We fit this log regression as a way of obtaining estimates of our real model, namely

yj = exp(b0 + Xjb + εj)

So rather than taking the expectation of ln(yj), lets take the expectation of yj:

E(yj) = E(exp(b0 + Xjb + εj))

= E(exp(b0 + Xjb) * exp(εj))

= exp(b0 + Xjb) * E(exp(εj))

E(exp(εj)) is not one. E(exp(εj)) for εj distributed N(0, σ2) is exp(σ2/2). We thus obtain

E(yj) = exp(b0 + Xjb) * exp(σ2/2)

People who fit log regressions know about this — or should — and know that to obtain predicted yj values, they must

- Obtain predicted values for ln(yj) = b0 + Xjb.

- Exponentiate the predicted log values.

- Multiply those exponentiated values by exp(σ2/2), where σ2 is the square of the root-mean-square-error (RMSE) of the regression.

They do in this in Stata by typing

. predict yhat

. replace yhat = exp(yhat).

. replace yhat = yhat*exp(e(rmse)^2/2)

In the table I that just showed you,

truth est. S.E.

----------------------------------

educ 0.0600 0.0716 0.0100

exp 0.1000 0.1092 0.0129

exp2 -0.0020 -0.0022 0.0003

-----------------------------------

_cons 8.5172 8.2725 0.1856 <- unadjusted (1)

9.0587 8.7959 ? <- adjusted (2)

-----------------------------------

(1) To be used for predicting E(ln(yj))

(2) To be used for predicting E(yj)

I’m setting us up to compare these estimates with those produced by poisson. When we estimate using poisson, we will not need to take logs because the Poisson model is stated in terms of yj, not ln(yj). In prepartion for that, I have included two lines for the intercept — 8.5172, which is the intercept reported by regress and is the one appropriate for making predictions of ln(y) — and 9.0587, an intercept appropriate for making predictions of y and equal to 8.5172 plus σ2/2. Poisson regression will estimate the 9.0587 result because Poisson is stated in terms of y rather than ln(y).

I placed a question mark in the column for the standard error of the adjusted intercept because, to calculate that, I would need to know the standard error of the estimated RMSE, and regress does not calculate that.

Let’s now look at the results that poisson with option vce(robust) reports. We must not forget to specify option vce(robust) because otherwise, in this model that violates the Poisson assumption that E(yj) = Var(yj), we would obtain incorrect standard errors.

. poisson y educ exp exp2, vce(robust)

note: you are responsible for interpretation of noncount dep. variable

Iteration 0: log pseudolikelihood = -1.484e+08

Iteration 1: log pseudolikelihood = -1.484e+08

Iteration 2: log pseudolikelihood = -1.484e+08

Poisson regression Number of obs = 5000

Wald chi2(3) = 67.52

Prob > chi2 = 0.0000

Log pseudolikelihood = -1.484e+08 Pseudo R2 = 0.0183

------------------------------------------------------------------------------

| Robust

y | Coef. Std. Err. z P>|z| [95% Conf. Interval]

-------------+----------------------------------------------------------------

educ | .0575636 .0127996 4.50 0.000 .0324769 .0826504

exp | .1074603 .0163766 6.56 0.000 .0753628 .1395578

exp2 | -.0022204 .0003604 -6.16 0.000 -.0029267 -.0015141

_cons | 9.016428 .2359002 38.22 0.000 8.554072 9.478784

------------------------------------------------------------------------------

So now we can fill in the rest of our table:

regress poisson

truth est. S.E. est. S.E.

-----------------------------------------------------

educ 0.0600 0.0716 0.0100 0.0576 0.1280

exp 0.1000 0.1092 0.0129 0.1075 0.0164

exp2 -0.0020 -0.0022 0.0003 -0.0022 0.0003

------------------------------------------------------

_cons 8.5172 8.2725 0.1856 ? ? <- (1)

9.0587 8.7959 ? 9.0164 0.2359 <- (2)

------------------------------------------------------

(1) To be used for predicting E(ln(yj))

(2) To be used for predicting E(yj)

I told you that Poisson works, and in this case, it works well. I’ll now tell you that in all cases it works well, and it works better than log regression. You want to think about Poisson regression with the vce(robust) option as a better alternative to log regression.

How is Poisson better?

First off, Poisson handles outcomes that are zero. Log regression does not because ln(0) is -∞. You want to be careful about what it means to handle zeros, however. Poisson handles zeros that arise in correspondence to the model. In the Poisson model, everybody participates in the yj = exp(b0 + Xjb + εj) process. Poisson regression does not handle cases where some participate and others do not, and among those who do not, had they participated, would likely produce an outcome greater than zero. I would never suggest using Poisson regression to handle zeros in an earned income model because those that earned zero simply didn’t participate in the labor force. Had they participated, their earnings might have been low, but certainly they would have been greater than zero. Log linear regression does not handle that problem, either.

Natural zeros do arise in other situations, however, and a popular question on Statalist is whether one should recode those natural zeros as 0.01, 0.0001, or 0.0000001 to avoid the missing values when using log linear regression. The answer is that you should not recode at all; you should use Poisson regression with vce(robust).

Secondly, small nonzero values, however they arise, can be influential in log-linear regressions. 0.01, 0.0001, 0.0000001, and 0 may be close to each other, but in the logs they are -4.61, -9.21, -16.12, and -∞ and thus not close at all. Pretending that the values are close would be the same as pretending that that exp(4.61)=100, exp(9.21)=9,997, exp(16.12)=10,019,062, and exp(∞)=∞ are close to each other. Poisson regression understands that 0.01, 0.0001, 0.0000001, and 0 are indeed nearly equal.

Thirdly, when estimating with Poisson, you do not have to remember to apply the exp(σ2/2) multiplicative adjustment to transform results from ln(y) to y. I wrote earlier that people who fit log regressions of course remember to apply the adjustment, but the sad fact is that they do not.

Finally, I would like to tell you that everyone who estimates log models knows about the Poisson-regression alternative and it is only you who have been out to lunch. You, however, are in esteemed company. At the recent Stata Conference in Chicago, I asked a group of knowledgeable researchers a loaded question, to which the right answer was Poisson regression with option vce(robust), but they mostly got it wrong.

I said to them, “I have a process for which it is perfectly reasonable to assume that the mean of yj is given by exp(b0 + Xjb), but I have no reason to believe that E(yj) = Var(yj), which is to say, no reason to suspect that the process is Poisson. How would you suggest I estimate the model?” Certainly not using Poisson, they replied. Social scientists suggested I use log regression. Biostatisticians and health researchers suggested I use negative binomial regression even when I objected that the process was not the gamma mixture of Poissons that negative binomial regression assumes. “What else can you do?” they said and shrugged their collective shoulders. And of course, they just assumed over dispersion.

Based on those answers, I was ready to write this blog entry, but it turned out differently than I expected. I was going to slam negative binomial regression. Negative binomial regression makes assumptions about the variance, assumptions different from that made by Poisson, but assumptions nonetheless, and unlike the assumption made in Poisson, those assumptions do appear in the first-order conditions that determine the fitted coefficients that negative binomial regression reports. Not only would negative binomial’s standard errors be wrong — which vce(robust) could fix — but the coefficients would be biased, too, and vce(robust) would not fix that. I planned to run simulations showing this.

When I ran the simulations, I was surprised by the results. The negative binomial estimator (Stata’s nbreg) was remarkably robust to violations in variance assumptions as long as the data were overdispersed. In fact, negative binomial regression did about as well as Poisson regression. I did not run enough simulations to make generalizations, and theory tells me those generalizations have to favor Poisson, but the simulations suggested that if Poisson does do better, it’s not in the first four decimal places. I was impressed. And disappointed. It would have been a dynamite blog entry.

So you’ll have to content yourself with this one.

Others have preceeded me in the knowledge that Poisson regression with vce(robust) is a better alternative to log-linear regression. I direct you to Jeffery Wooldridge, Econometric Analysis of Cross Section and Panel Data, 2nd ed., chapter 18. Or see A. Colin Cameron and Pravin K. Trivedi, Microeconomics Using Stata, revised edition, chapter 17.3.2.

I first learned about this from a talk given by Austin Nichols, Regression for nonnegative skewed dependent variables, given in 2010 at the Stata Conference in Boston. That talk goes far beyond what I have presented here, and I heartily recommend it.

Multilevel random effects in xtmixed and sem — the long and wide of it

xtmixed was built from the ground up for dealing with multilevel random effects — that is its raison d’être. sem was built for multivariate outcomes, for handling latent variables, and for estimating structural equations (also called simultaneous systems or models with endogeneity). Can sem also handle multilevel random effects (REs)? Do we care?

This would be a short entry if either answer were “no”, so let’s get after the first question.

Can sem handle multilevel REs?

A good place to start is to simulate some multilevel RE data. Let’s create data for the 3-level regression model

where the classical multilevel regression assumption holds that

and

and  are distributed

are distributed  normal and are uncorrelated.

normal and are uncorrelated.

This represents a model of  nested within

nested within  nested within

nested within  . An example would be students nested within schools nested within counties. We have random intercepts at the 2nd and 3rd levels —

. An example would be students nested within schools nested within counties. We have random intercepts at the 2nd and 3rd levels —  ,

,  . Because these are random effects, we need estimate only the variance of

. Because these are random effects, we need estimate only the variance of  ,

,  , and

, and  .

.

For our simulated data, let’s assume there are 3 groups at the 3rd level, 2 groups at the 2nd level within each 3rd level group, and 2 individuals within each 2nd level group. Or,  ,

,  , and

, and  . Having only 3 groups at the 3rd level is silly. It gives us only 3 observations to estimate the variance of

. Having only 3 groups at the 3rd level is silly. It gives us only 3 observations to estimate the variance of  . But with only

. But with only  observations, we will be able to easily see our entire dataset, and the concepts scale to any number of 3rd-level groups.

observations, we will be able to easily see our entire dataset, and the concepts scale to any number of 3rd-level groups.

First, create our 3rd-level random effects —  .

.

. set obs 3

. gen k = _n

. gen Uk = rnormal()

There are only 3  in our dataset.

in our dataset.

I am showing the effects symbolically in the table rather than showing numeric values. It is the pattern of unique effects that will become interesting, not their actual values.

Now, create our 2nd-level random effects —  — by doubling this data and creating 2nd-level effects.

— by doubling this data and creating 2nd-level effects.

. expand 2

. by k, sort: gen j = _n

. gen Vjk = rnormal()

We have 6 unique values of our 2nd-level effects and the same 3 unique values of our 3rd-level effects. Our original 3rd-level effects just appear twice each.

Now, create our 1st-level random effects —  — which we typically just call errors.

— which we typically just call errors.

. expand 2

. by k j, sort: gen i = _n

. gen Eijk = rnormal()

There are still only 3 unique  in our dataset, and only 6 unique

in our dataset, and only 6 unique  .

.

Finally, we create our regression data, using  ,

,

. gen xijk = runiform()

. gen yijk = 2 * xijk + Uk + Vjk + Eijk

We could estimate our multilevel RE model on this data by typing,

. xtmixed yijk xijk || k: || j:

xtmixed uses the index variables k and j to deeply understand the multilevel structure of the our data. sem has no such understanding of multilevel data. What it does have is an understanding of multivariate data and a comfortable willingness to apply constraints.

Let’s restructure our data so that sem can be made to understand its multilevel structure.

First some renaming so that the results of our restructuring will be easier to interpret.

. rename Uk U

. rename Vjk V

. rename Eijk E

. rename xijk x

. rename yijk y

We reshape to turn our multilevel data into multivariate data that sem has a chance of understanding. First, we reshape wide on our 2nd-level identifier j. Before that, we egen to create a unique identifier for each observation of the two groups identified by j.

. egen ik = group(i k)

. reshape wide y x E V, i(ik) j(j)

We now have a y variable for each group in j (y1 and y2). Likewise, we have two x variables, two residuals, and most importantly two 2nd-level random effects V1 and V2. This is the same data, we have merely created a set of variables for every level of j. We have gone from multilevel to multivariate.

We still have a multilevel component. There are still two levels of i in our dataset. We must reshape wide again to remove any remnant of multilevel structure.

. drop ik

. reshape wide y* x* E*, i(k) j(i)

I admit that is a microscopic font, but it is the structure that is important, not the values. We now have 4 y’s, one for each combination of 2nd- and 3rd-level identifiers — i and j. Likewise for the x’s and E’s.

We can think of each xji yji pair of columns as representing a regression for a specific combination of j and i — y11 on x11, y12 on x12, y21 on x21, and y22 on x22. Or, more explicitly,

So, rather than a univariate multilevel regression with 4 nested observation sets, ( ) * (

) * ( ), we now have 4 regressions which are all related through

), we now have 4 regressions which are all related through  and each of two pairs are related through

and each of two pairs are related through  . Oh, and all share the same coefficient

. Oh, and all share the same coefficient  . Oh, and the

. Oh, and the  all have identical variances. Oh, and the

all have identical variances. Oh, and the  also have identical variances. Luckily both the sem command and the SEM Builder (the GUI for sem) make setting constraints easy.

also have identical variances. Luckily both the sem command and the SEM Builder (the GUI for sem) make setting constraints easy.

There is one other thing we haven’t addressed. xtmixed understands random effects. Does sem? Random effects are just unobserved (latent) variables and sem clearly understands those. So, yes, sem does understand random effects.

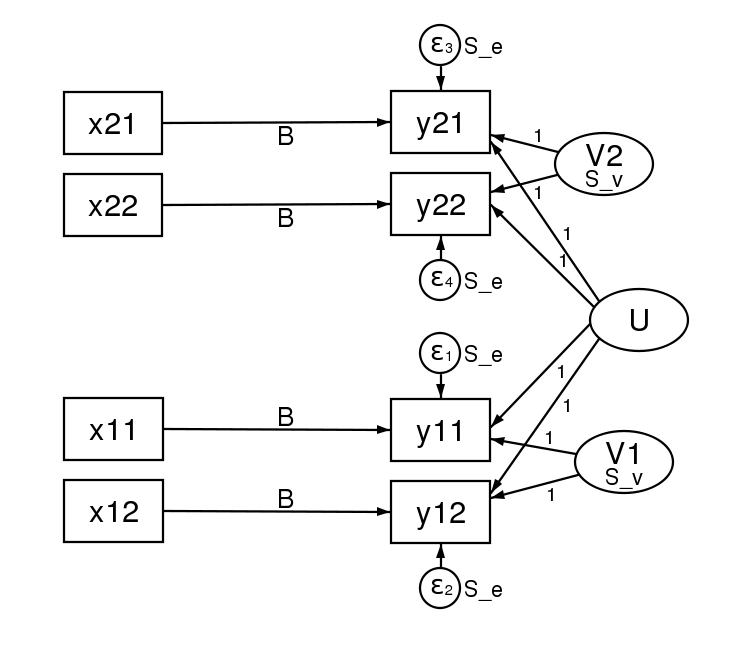

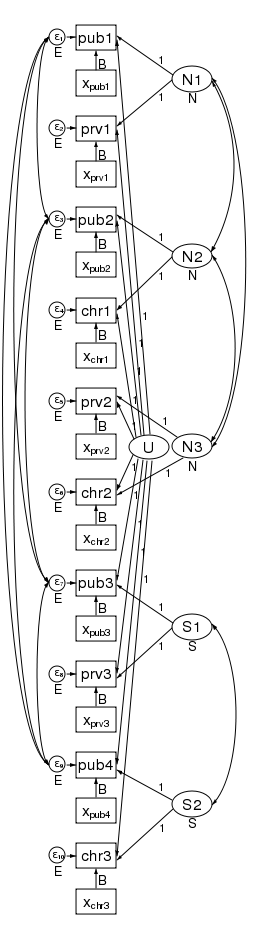

Many SEMers would represent this model in a path diagram by drawing.

There is a lot of information in that diagram. Each regression is represented by one of the x boxes being connected by a path to a y box. That each of the four paths is labeled with  means that we have constrained the regressions to have the same coefficient. The y21 and y22 boxes also receive input from the random latent variable V2 (representing our 2nd-level random effects). The other two y boxes receive input from V1 (also our 2nd-level random effects). For this to match how xtmixed handles random effects, V1 and V2 must be constrained to have the same variance. This was done in the path diagram by “locking” them to have the same variance — S_v. To match xtmixed, each of the four residuals must also have the same variance — shown in the diagram as S_e. The residuals and random effect variables also have their paths constrained to 1. That is to say, they do not have coefficients.

means that we have constrained the regressions to have the same coefficient. The y21 and y22 boxes also receive input from the random latent variable V2 (representing our 2nd-level random effects). The other two y boxes receive input from V1 (also our 2nd-level random effects). For this to match how xtmixed handles random effects, V1 and V2 must be constrained to have the same variance. This was done in the path diagram by “locking” them to have the same variance — S_v. To match xtmixed, each of the four residuals must also have the same variance — shown in the diagram as S_e. The residuals and random effect variables also have their paths constrained to 1. That is to say, they do not have coefficients.

We do not need any of the U, V, or E variables. We kept these only to make clear how the multilevel data was restructured to multivariate data. We might “follow the money” in a criminal investigation, but with simulated multilevel data is is best to “follow the effects”. Seeing how these effects were distributed in our reshaped data made it clear how they entered our multivariate model.

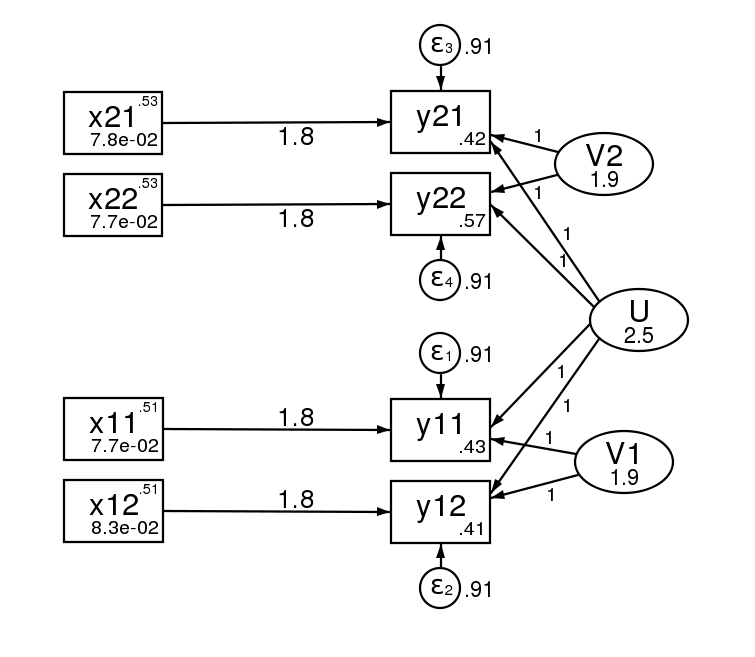

Just to prove that this all works, here are the results from a simulated dataset ( rather than the 3 that we have been using). The xtmixed results are,

rather than the 3 that we have been using). The xtmixed results are,

. xtmixed yijk xijk || k: || j: , mle var

(log omitted)

Mixed-effects ML regression Number of obs = 400

-----------------------------------------------------------

| No. of Observations per Group

Group Variable | Groups Minimum Average Maximum

----------------+------------------------------------------

k | 100 4 4.0 4

j | 200 2 2.0 2

-----------------------------------------------------------

Wald chi2(1) = 61.84

Log likelihood = -768.96733 Prob > chi2 = 0.0000

------------------------------------------------------------------------------

yijk | Coef. Std. Err. z P>|z| [95% Conf. Interval]

-------------+----------------------------------------------------------------

xijk | 1.792529 .2279392 7.86 0.000 1.345776 2.239282

_cons | .460124 .2242677 2.05 0.040 .0205673 .8996807

------------------------------------------------------------------------------

------------------------------------------------------------------------------

Random-effects Parameters | Estimate Std. Err. [95% Conf. Interval]

-----------------------------+------------------------------------------------

k: Identity |

var(_cons) | 2.469012 .5386108 1.610034 3.786268

-----------------------------+------------------------------------------------

j: Identity |

var(_cons) | 1.858889 .332251 1.309522 2.638725

-----------------------------+------------------------------------------------

var(Residual) | .9140237 .0915914 .7510369 1.112381

------------------------------------------------------------------------------

LR test vs. linear regression: chi2(2) = 259.16 Prob > chi2 = 0.0000

Note: LR test is conservative and provided only for reference.

The sem results are,

sem (y11 <- x11@bx _cons@c V1@1 U@1)

(y12 <- x12@bx _cons@c V1@1 U@1)

(y21 <- x21@bx _cons@c V2@1 U@1)

(y22 <- x22@bx _cons@c V2@1 U@1) ,

covstruct(_lexog, diagonal) cov(_lexog*_oexog@0)

cov( V1@S_v V2@S_v e.y11@S_e e.y12@S_e e.y21@S_e e.y22@S_e)

(notes omitted)

Endogenous variables

Observed: y11 y12 y21 y22

Exogenous variables

Observed: x11 x12 x21 x22

Latent: V1 U V2

(iteration log omitted)

Structural equation model Number of obs = 100

Estimation method = ml

Log likelihood = -826.63615

(constraint listing omitted)

------------------------------------------------------------------------------

| OIM | Coef. Std. Err. z P>|z| [95% Conf. Interval]

-------------+----------------------------------------------------------------

Structural |

y11 <- |

x11 | 1.792529 .2356323 7.61 0.000 1.330698 2.25436

V1 | 1 7.68e-17 1.3e+16 0.000 1 1

U | 1 2.22e-18 4.5e+17 0.000 1 1

_cons | .460124 .226404 2.03 0.042 .0163802 .9038677

-----------+----------------------------------------------------------------

y12 <- |

x12 | 1.792529 .2356323 7.61 0.000 1.330698 2.25436

V1 | 1 2.00e-22 5.0e+21 0.000 1 1

U | 1 5.03e-17 2.0e+16 0.000 1 1

_cons | .460124 .226404 2.03 0.042 .0163802 .9038677

-----------+----------------------------------------------------------------

y21 <- |

x21 | 1.792529 .2356323 7.61 0.000 1.330698 2.25436

U | 1 5.70e-46 1.8e+45 0.000 1 1

V2 | 1 5.06e-45 2.0e+44 0.000 1 1

_cons | .460124 .226404 2.03 0.042 .0163802 .9038677

-----------+----------------------------------------------------------------

y22 <- |

x22 | 1.792529 .2356323 7.61 0.000 1.330698 2.25436

U | 1 (constrained)

V2 | 1 (constrained)

_cons | .460124 .226404 2.03 0.042 .0163802 .9038677

-------------+----------------------------------------------------------------

Variance |

e.y11 | .9140239 .091602 .75102 1.112407

e.y12 | .9140239 .091602 .75102 1.112407

e.y21 | .9140239 .091602 .75102 1.112407

e.y22 | .9140239 .091602 .75102 1.112407

V1 | 1.858889 .3323379 1.309402 2.638967

U | 2.469011 .5386202 1.610021 3.786296

V2 | 1.858889 .3323379 1.309402 2.638967

-------------+----------------------------------------------------------------

Covariance |

x11 |

V1 | 0 (constrained)

U | 0 (constrained)

V2 | 0 (constrained)

-----------+----------------------------------------------------------------

x12 |

V1 | 0 (constrained)

U | 0 (constrained)

V2 | 0 (constrained)

-----------+----------------------------------------------------------------

x21 |

V1 | 0 (constrained)

U | 0 (constrained)

V2 | 0 (constrained)

-----------+----------------------------------------------------------------

x22 |

V1 | 0 (constrained)

U | 0 (constrained)

V2 | 0 (constrained)

-----------+----------------------------------------------------------------

V1 |

U | 0 (constrained)

V2 | 0 (constrained)

-----------+----------------------------------------------------------------

U |

V2 | 0 (constrained)

------------------------------------------------------------------------------

LR test of model vs. saturated: chi2(25) = 22.43, Prob > chi2 = 0.6110

And here is the path diagram after estimation.

The standard errors of the two estimation methods are asymptotically equivalent, but will differ in finite samples.

Sidenote: Those familiar with multilevel modeling will be wondering if sem can handle unbalanced data. That is to say a different number of observations or subgroups within groups. It can. Simply let reshape create missing values where it will and then add the method(mlmv) option to your sem command. mlmv stands for maximum likelihood with missing values. And, as strange as it may seem, with this option the multivariate sem representation and the multilevel xtmixed representations are the same.

Do we care?

You will have noticed that the sem command was, well, it was really long. (I wrote a little loop to get all the constraints right.) You will also have noticed that there is a lot of redundant output because our SEM model has so many constraints. Why would anyone go to all this trouble to do something that is so simple with xtmixed? The answer lies in all of those constraints. With sem we can relax any of those constraints we wish!

Relax the constraint that the V# have the same variance and you can introduce heteroskedasticity in the 2nd-level effects. That seems a little silly when there are only two levels, but imagine there were 10 levels.

Add a covariance between the V# and you introduce correlation between the groups in the 3rd level.

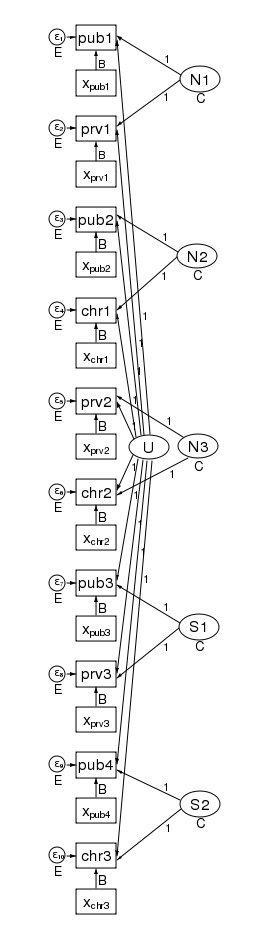

What’s more, the pattern of heteroskedasticity and correlation can be arbitrary. Here is our path diagram redrawn to represent children within schools within counties and increasing the number of groups in the 2nd level.

We have 5 counties at the 3rd level and two schools within each county at the 2nd level — for a total of 10 dimensions in our multivariate regression. The diagram does not change based on the number of children drawn from each school.

Our regression coefficients have been organized horizontally down the center of the diagram to allow room along the left and right for the random effects. Taken as a multilevel model, we have only a single covariate — x. Just to be clear, we could generalize this to multiple covariates by adding more boxes with covariates for each dependent variable in the diagram.

The labels are chosen carefully. The 3rd-level effects N1, N2, and N3 are for northern counties, and the remaining second level effects S1 and S2 are for southern counties. There is a separate dependent variable and associated error for each school. We have 4 public schools (pub1 pub2, pub3, and pub4); three private schools (prv1 prv2, and prv3); and 3 church-sponsored schools (chr1 chr2, and chr3).

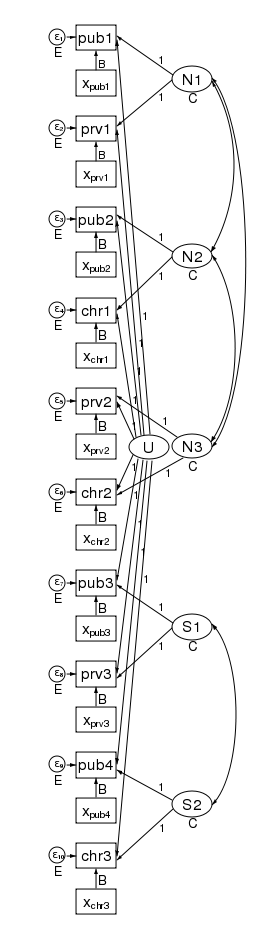

The multivariate structure seen in the diagram makes it clear that we can relax some constraints that the multilevel model imposes. Because the sem representation of the model breaks the 2nd level effect into an effect for each county, we can apply a structure to the 2nd level effect. Consider the path diagram below.

We have correlated the effects for the 3 northern counties. We did this by drawing curved lines between the effects. We have also correlated the effects of the two southern counties. xtmixed does not allow these types of correlations. Had we wished, we could have constrained the correlations of the 3 northern counties to be the same.

We could also have allowed the northern and southern counties to have different variances. We did just that in the diagram below by constraining the northern counties variances to be N and the southern counties variances to be S.

In this diagram we have also correlated the errors for the 4 public schools. As drawn, each correlation is free to take on its own values, but we could just as easily constrain each public school to be equally correlated with all other public schools. Likewise, to keep the diagram readable, we did not correlate the private schools with each other or the church schools with each other. We could have done that.

There is one thing that xtmixed can do that sem cannot. It can put a structure on the residual correlations within the 2nd level groups. xtmixed has a special option, residuals(), for just this purpose.

With xtmixed and sem you get,

- robust and cluster-robust SEs

- survey data

With sem you also get

- endogenous covariates

- estimation by GMM

- missing data — MAR (also called missing on observables)

- heteroskedastic effects at any level

- correlated effects at any level

- easy score tests using estat scoretests

- are the

![beta beta]() coefficients truly are the same across all equations/levels, whether effects?

coefficients truly are the same across all equations/levels, whether effects? - are effects or sets of effects uncorrelated?

- are effects within a grouping homoskedastic?

- …

- are the

Whether you view this rethinking of multilevel random-effects models as multivariate structural equation models (SEMs) as interesting, or merely an academic exercise, depends on whether your model calls for any of the items in the second list.

Building complicated expressions the easy way

Have you every wanted to make an “easy” calculation–say, after fitting a model–and gotten lost because you just weren’t sure where to find the degrees of freedom of the residual or the standard error of the coefficient? Have you ever been in the midst of constructing an “easy” calculation and was suddenly unsure just what e(df_r) really was? I have a solution.

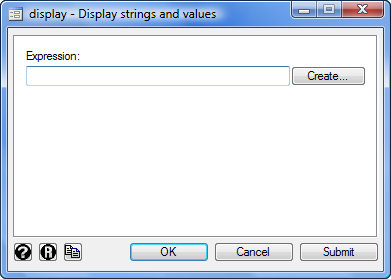

It’s called Stata’s expression builder. You can get to it from the display dialog (Data->Other Utilities->Hand Calculator)

In the dialog, click the Create button to bring up the builder. Really, it doesn’t look like much:

I want to show you how to use this expression builder; if you’ll stick with me, it’ll be worth your time.

Let’s start over again and assume you are in the midst of an analysis, say,

. sysuse auto, clear . regress price mpg length

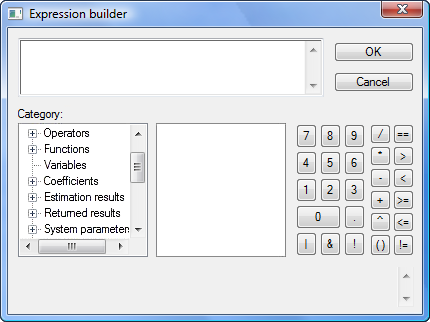

Next invoke the expression builder by pulling down the menu Data->Other Utilities->Hand Calculator. Click Create. It looks like this:

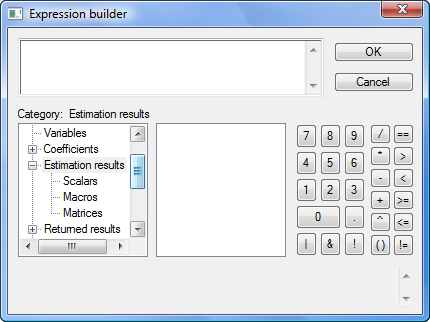

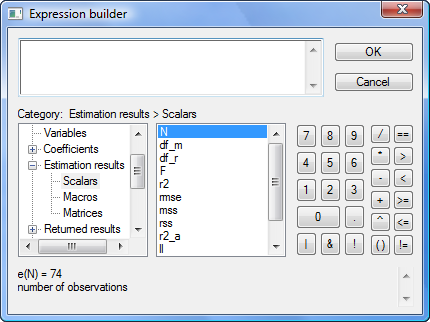

Now click on the tree node icon (+) in front of “Estimation results” and then scroll down to see what’s underneath. You’ll see

Click on Scalars:

The middle box now contains the scalars stored in e(). N happens to be highlighted, but you could click on any of the scalars. If you look below the two boxes, you see the value of the e() scalar selected as well as its value and a short description. e(N) is 74 and is the “number of observations”.

It works the same way for all the other categories in the box on the left: Operators, Functions, Variables, Coefficients, Estimation results, Returned results, System parameters, Matrices, Macros, Scalars, Notes, and Characteristics. You simply click on the tree node icon (+), and the category expands to show what is available.

You have now mastered the expression builder!

Let’s try it out.

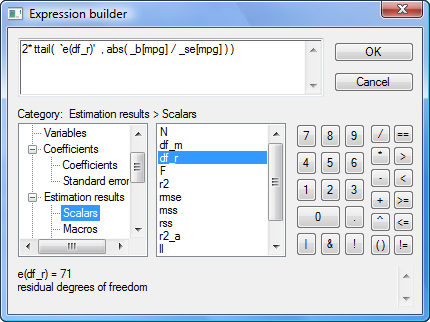

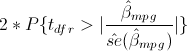

Say you want to verify that the p-value of the coefficient on mpg is correctly calculated by regress–which reports 0.052–or more likely, you want to verify that you know how it was calculated. You think the formula is

or, as an expression in Stata,

2*ttail(e(df_r), abs(_b[mpg]/_se[mpg]))

But I’m jumping ahead. You may not remember that _b[mpg] is the coefficient on variable mpg, or that _se[mpg] is its corresponding standard error, or that abs() is Stata’s absolute value function, or that e(df_r) is the residual degrees of freedom from the regression, or that ttail() is Stata’s Student’s t distribution function. We can build the above expression using the builder because all the components can be accessed through the builder. The ttail() and abs() functions are in the Functions category, the e(df_r) scalar is in the Estimation results category, and _b[mpg] and _se[mpg] are in the Coefficients category.

What’s nice about the builder is that not only are the item names listed but also a definition, syntax, and value are displayed when you click on an item. Having all this information in one place makes building a complex expression much easier.

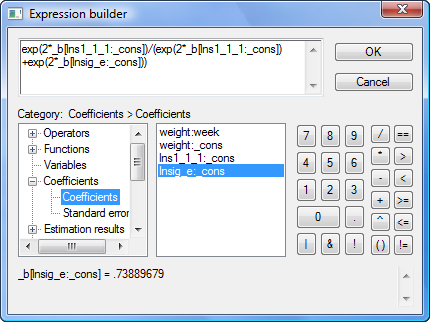

Another example of when the expression builder comes in handy is when computing intraclass correlations after xtmixed. Consider a simple two-level model from Example 1 in [XT] xtmixed, which models weight trajectories of 48 pigs from 9 successive weeks:

. use http://www.stata-press.com/data/r12/pig . xtmixed weight week || id:, variance

The intraclass correlation is a nonlinear function of variance components. In this example, the (residual) intraclass correlation is the ratio of the between-pig variance, var(_cons), to the total variance, between-pig variance plus residual (within-pig) variance, or var(_cons) + var(residual).

The xtmixed command does not store the estimates of variance components directly. Instead, it stores them as log standard deviations in e(b) such that _b[lns1_1_1:_cons] is the estimated log of between-pig standard deviation, and _b[lnsig_e:_cons] is the estimated log of residual (within-pig) standard deviation. So to compute the intraclass correlation, we must first transform log standard deviations to variances:

exp(2*_b[lns1_1_1:_cons])

exp(2*_b[lnsig_e:_cons])

The final expression for the intraclass correlation is then

exp(2*_b[lns1_1_1:_cons]) / (exp(2*_b[lns1_1_1:_cons])+exp(2*_b[lnsig_e:_cons]))

The problem is that few people remember that _b[lns1_1_1:_cons] is the estimated log of between-pig standard deviation. The few who do certainly do not want to type it. So use the expression builder as we do below:

In this case, we’re using the expression builder accessed from Stata’s nlcom dialog, which reports estimated nonlinear combinations along with their standard errors. Once we press OK here and in the nlcom dialog, we’ll see

. nlcom (exp(2*_b[lns1_1_1:_cons])/(exp(2*_b[lns1_1_1:_cons])+exp(2*_b[lnsig_e:_cons])))

_nl_1: exp(2*_b[lns1_1_1:_cons])/(exp(2*_b[lns1_1_1:_cons])+exp(2*_b[lnsig_e:_cons]))

------------------------------------------------------------------------------

weight | Coef. Std. Err. z P>|z| [95% Conf. Interval]

-------------+----------------------------------------------------------------

_nl_1 | .7717142 .0393959 19.59 0.000 .6944996 .8489288

------------------------------------------------------------------------------

The above could easily be extended to computing different types of intraclass correlations arising in higher-level random-effects models. The use of the expression builder for that becomes even more handy.

Comparing predictions after arima with manual computations

Some of our users have asked about the way predictions are computed after fitting their models with arima. Those users report that they cannot reproduce the complete set of forecasts manually when the model contains MA terms. They specifically refer that they are not able to get the exact values for the first few predicted periods. The reason for the difference between their manual results and the forecasts obtained with predict after arima is the way the starting values and the recursive predictions are computed. While Stata uses the Kalman filter to compute the forecasts based on the state space representation of the model, users reporting differences compute their forecasts with a different estimator that is based on the recursions derived from the ARIMA representation of the model. Both estimators are consistent but they produce slightly different results for the first few forecasting periods.

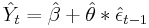

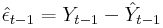

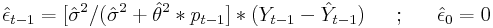

When using the postestimation command predict after fitting their MA(1) model with arima, some users claim that they should be able to reproduce the predictions with

where

However, the recursive formula for the Kalman filter prediction is based on the shrunk error (See section 13.3 in Hamilton (1993) for the complete derivation based on the state space representation):

where

: is the estimated variance of the white noise disturbance

: is the estimated variance of the white noise disturbance

: corresponds to the unconditional mean for the error term

: corresponds to the unconditional mean for the error term

Let’s use one of the datasets available from our website to fit a MA(1) model and compute the predictions based on the Kalman filter recursions formulated above:

** Predictions with Kalman Filter recursions (obtained with -predict- **

use http://www.stata-press.com/data/r12/lutkepohl, clear

arima dlinvestment, ma(1)

predict double yhat

** Coefficient estimates and sigma^2 from ereturn list **

scalar beta = _b[_cons]

scalar theta = [ARMA]_b[L1.ma]

scalar sigma2 = e(sigma)^2

** pt and shrinking factor for the first two observations**

generate double pt=sigma2 in 1/2

generate double sh_factor=(sigma2)/(sigma2+theta^2*pt) in 2

** Predicted series and errors for the first two observations **

generate double my_yhat = beta

generate double myehat = sh_factor*(dlinvestment - my_yhat) in 2

** Predictions with the Kalman filter recursions **

quietly {

forvalues i = 3/91 {

replace my_yhat = my_yhat + theta*l.myehat in `i'

replace pt= (sigma2*theta^2*L.pt)/(sigma2+theta^2*L.pt) in `i'

replace sh_factor=(sigma2)/(sigma2+theta^2*pt) in `i'

replace myehat=sh_factor*(dlinvestment - my_yhat) in `i'

}

}

List the first 10 predictions (yhat from predict and my_yhat from the manual computations):

. list qtr yhat my_yhat pt sh_factor in 1/10

+--------------------------------------------------------+

| qtr yhat my_yhat pt sh_factor |

|--------------------------------------------------------|

1. | 1960q1 .01686688 .01686688 .00192542 . |

2. | 1960q2 .01686688 .01686688 .00192542 .97272668 |

3. | 1960q3 .02052151 .02052151 .00005251 .99923589 |

4. | 1960q4 .01478403 .01478403 1.471e-06 .99997858 |

5. | 1961q1 .01312365 .01312365 4.125e-08 .9999994 |

|--------------------------------------------------------|

6. | 1961q2 .00326376 .00326376 1.157e-09 .99999998 |

7. | 1961q3 .02471242 .02471242 3.243e-11 1 |

8. | 1961q4 .01691061 .01691061 9.092e-13 1 |

9. | 1962q1 .01412974 .01412974 2.549e-14 1 |

10. | 1962q2 .00643301 .00643301 7.147e-16 1 |

+--------------------------------------------------------+

Notice that the shrinking factor (sh_factor) tends to 1 as t increases, which implies that after a few initial periods the predictions produced with the Kalman filter recursions become exactly the same as the ones produced by the formula at the top of this entry for the recursions derived from the ARIMA representation of the model.

Reference:

Hamilton, James. 1994. Time Series Analysis. Princeton University Press.

Using Stata’s SEM features to model the Beck Depression Inventory

I just got back from the 2012 Stata Conference in San Diego where I gave a talk on Psychometric Analysis Using Stata and from the 2012 American Psychological Association Meeting in Orlando. Stata’s structural equation modeling (SEM) builder was popular at both meetings and I wanted to show you how easy it is to use. If you are not familiar with the basics of SEM, please refer to the references at the end of the blog. My goal is simply to show you how to use the SEM builder assuming that you already know something about SEM. If you would like to view a video demonstration of the SEM builder, please click the play button below:

The data used here and for the silly examples in my talk were simulated to resemble one of the most commonly used measures of depression: the Beck Depression Inventory (BDI). If you find these data too silly or not relevant to your own research, you could instead imagine it being a set of questions to measure mathematical ability, the ability to use a statistical package, or whatever you wanted.

The Beck Depression Inventory

Originally published by Aaron Beck and colleagues in 1961, the BDI marked an important change in the conceptualization of depression from a psychoanalytic perspective to a cognitive/behavioral perspective. It was also a landmark in the measurement of depression shifting from lengthy, expensive interviews with a psychiatrist to a brief, inexpensive questionnaire that could be scored and quantified. The original inventory consisted of 21 questions each allowing ordinal responses of increasing symptom severity from 0-3. The sum of the responses could then be used to classify a respondent’s depressive symptoms as none, mild, moderate or severe. Many studies have demonstrated that the BDI has good psychometric properties such as high test-retest reliability and the scores correlate well with the assessments of psychiatrists and psychologists. The 21 questions can also be grouped into two subscales. The affective scale includes questions like “I feel sad” and “I feel like a failure” that quantify emotional symptoms of depression. The somatic or physical scale includes questions like “I have lost my appetite” and “I have trouble sleeping” that quantify physical symptoms of depression. Since its original publication, the BDI has undergone two revisions in response to the American Psychiatric Association’s (APA) Diagnostic and Statistical Manuals (DSM) and the BDI-II remains very popular.

The Stata Depression Inventory

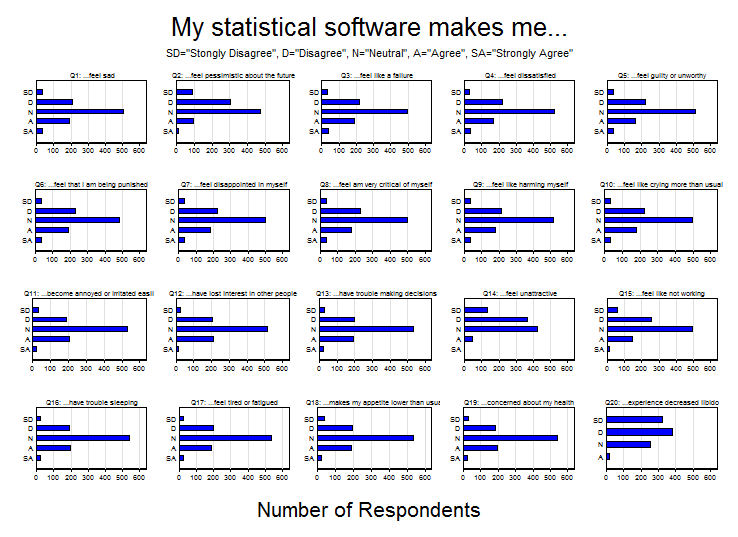

Since the BDI is a copyrighted psychometric instrument, I created a fictitious instrument called the “Stata Depression Inventory”. It consists of 20 questions each beginning with the phrase “My statistical software makes me…”. The individual questions are listed in the variable labels below.

. describe qu1-qu20 variable storage display value name type format label variable label ------------------------------------------------------------------------------ qu1 byte %16.0g response ...feel sad qu2 byte %16.0g response ...feel pessimistic about the future qu3 byte %16.0g response ...feel like a failure qu4 byte %16.0g response ...feel dissatisfied qu5 byte %16.0g response ...feel guilty or unworthy qu6 byte %16.0g response ...feel that I am being punished qu7 byte %16.0g response ...feel disappointed in myself qu8 byte %16.0g response ...feel am very critical of myself qu9 byte %16.0g response ...feel like harming myself qu10 byte %16.0g response ...feel like crying more than usual qu11 byte %16.0g response ...become annoyed or irritated easily qu12 byte %16.0g response ...have lost interest in other people qu13 byte %16.0g qu13_t1 ...have trouble making decisions qu14 byte %16.0g qu14_t1 ...feel unattractive qu15 byte %16.0g qu15_t1 ...feel like not working qu16 byte %16.0g qu16_t1 ...have trouble sleeping qu17 byte %16.0g qu17_t1 ...feel tired or fatigued qu18 byte %16.0g qu18_t1 ...makes my appetite lower than usual qu19 byte %16.0g qu19_t1 ...concerned about my health qu20 byte %16.0g qu20_t1 ...experience decreased libido

The responses consist of a 5-point Likert scale ranging from 1 (Strongly Disagree) to 5 (Strongly Agree). Questions 1-10 form the affective scale of the inventory and questions 11-20 form the physical scale. Data were simulated for 1000 imaginary people and included demographic variables such as age, sex and race. The responses can be summarized succinctly in a matrix of bar graphs:

Classical statistical analysis

The beginning of a classical statistical analysis of these data might consist of summing the responses for questions 1-10 and referring to them as the “Affective Depression Score” and summing questions 11-20 and referring to them as the “Physical Depression Score”.

egen Affective = rowtotal(qu1-qu10) label var Affective "Affective Depression Score" egen physical = rowtotal(qu11-qu20) label var physical "Physical Depression Score"

We could be more sophisticated and use principal components to create the affective and physical depression score:

pca qu1-qu20, components(2) predict Affective Physical label var Affective "Affective Depression Score" label var Physical "Physical Depression Score"

We could then ask questions such as “Are there differences in affective and physical depression scores by sex?” and test these hypotheses using multivariate statistics such as Hotelling’s T-squared statistic. The problem with this analysis strategy is that it treats the depression scores as though they were measured without error and can lead to inaccurate p-values for our test statistics.

Structural equation modeling

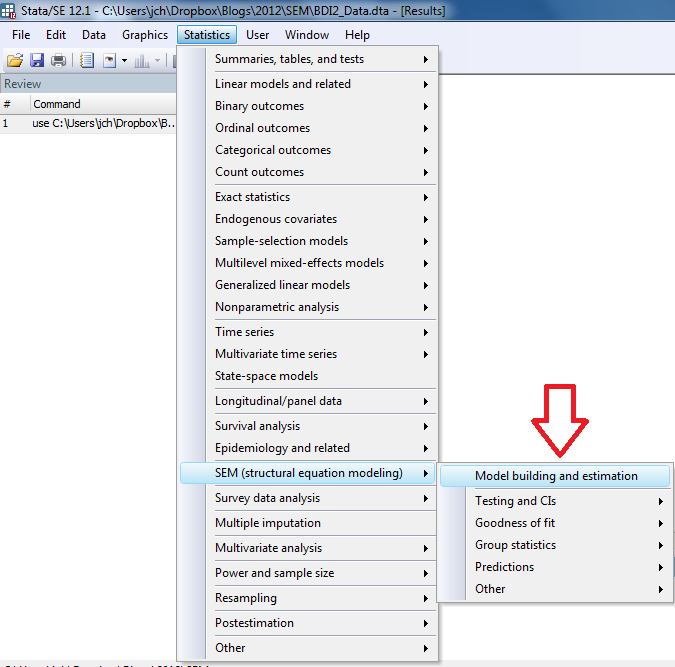

Structural equation modeling (SEM) is an ideal way to analyze data where the outcome of interest is a scale or scales derived from a set of measured variables. The affective and physical scores are treated as latent variables in the model resulting in accurate p-values and, best of all….these models are very easy to fit using Stata! We begin by selecting the SEM builder from the Statistics menu:

In the SEM builder, we can select the “Add Measurement Component” icon:

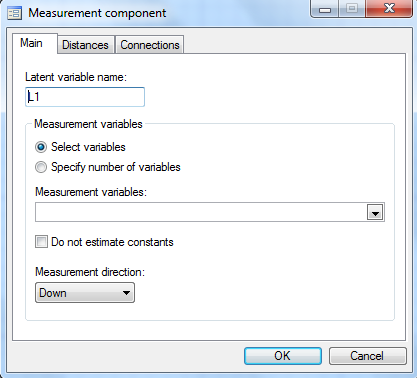

which will open the following dialog box:

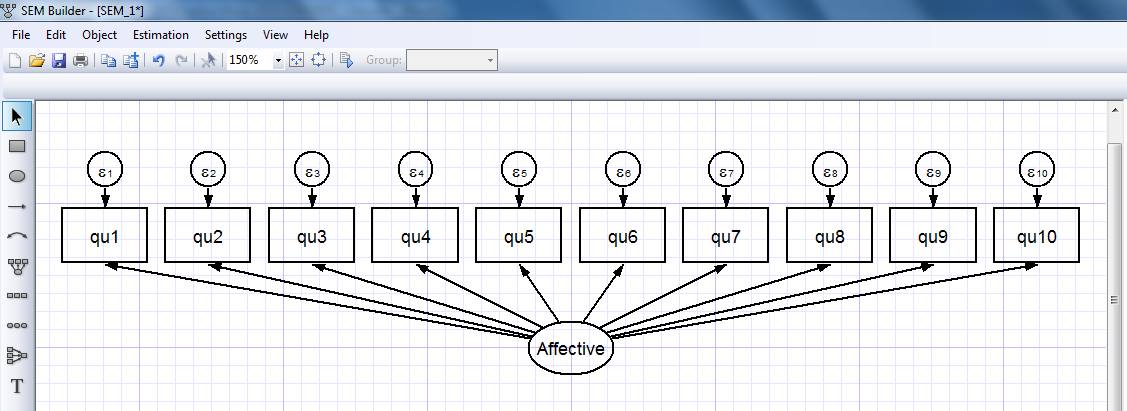

In the box labeled “Latent Variable Name” we can type “Affective” (red arrow below) and we can select the variables qu1-qu10 in the “Measured variables” box (blue arrow below).

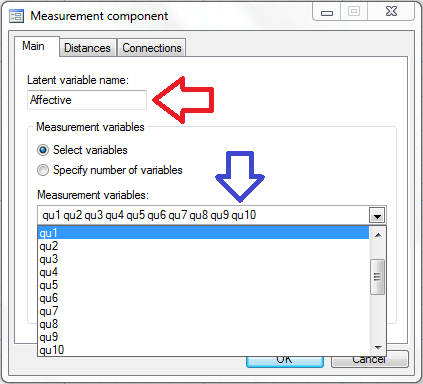

When we click “OK”, the affective measurement component appears in the builder:

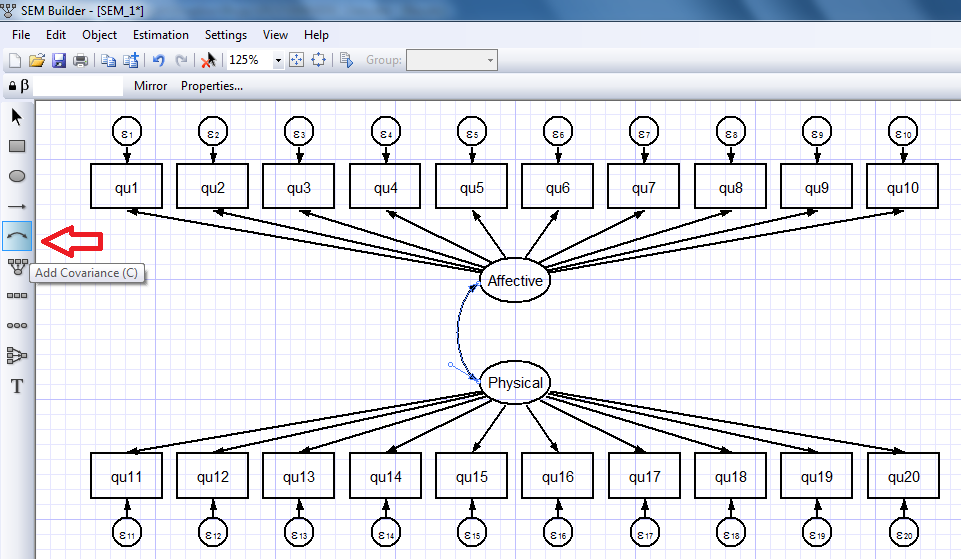

We can repeat this process to create a measurement component for our physical depression scale (images not shown). We can also allow for covariance/correlation between our affective and physical depression scales using the “Add Covariance” icon on the toolbar (red arrow below).

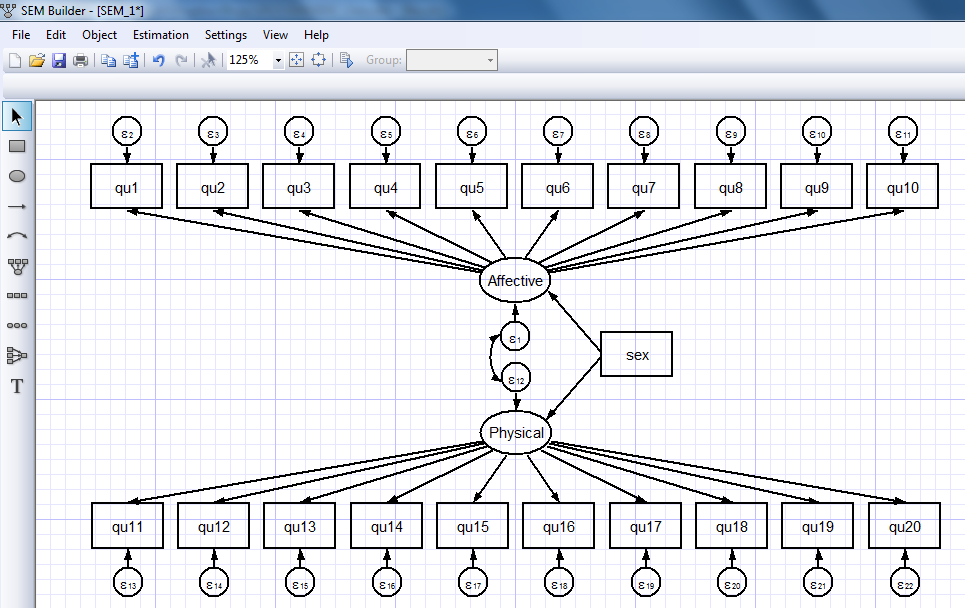

I’ll omit the intermediate steps to build the full model shown below but it’s easy to use the “Add Observed Variable” and “Add Path” icons to create the full model:

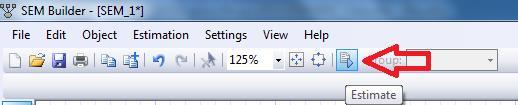

Now we’re ready to estimate the parameters for our model. To do this, we click the “Estimate” icon on the toolbar (duh!):

And the flowing dialog box appears:

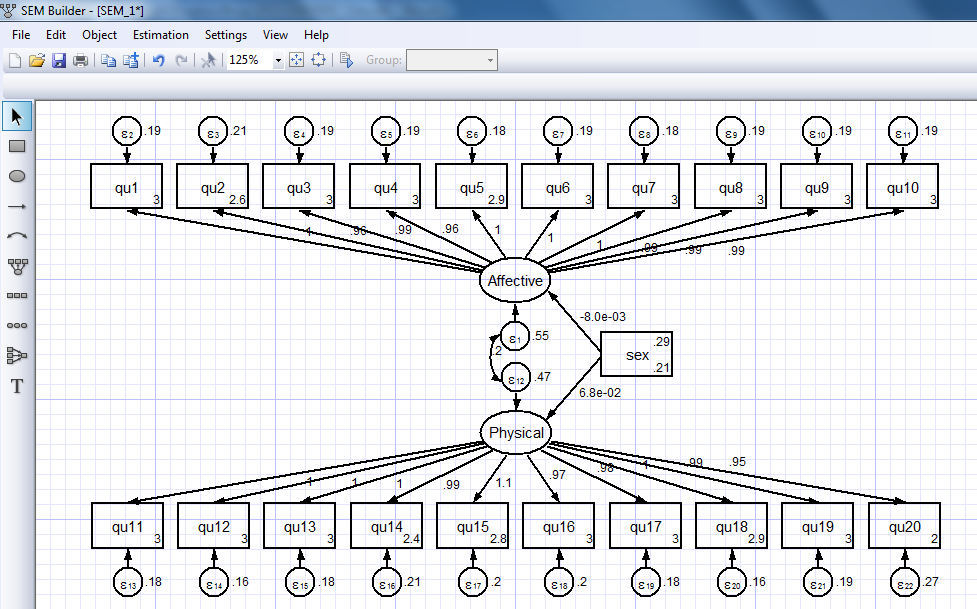

Let’s ignore the estimation options for now and use the default settings. Click “OK” and the parameter estimates will appear in the diagram:

Some of the parameter estimates are difficult to read in this form but it is easy to rearrange the placement and formatting of the estimates to make them easier to read.

If we look at Stata’s output window and scroll up, you’ll notice that the SEM Builder automatically generated the command for our model:

sem (Affective -> qu1) (Affective -> qu2) (Affective -> qu3)

(Affective -> qu4) (Affective -> qu5) (Affective -> qu6)

(Affective -> qu7) (Affective -> qu8) (Affective -> qu9)

(Affective -> qu10) (Physical -> qu11) (Physical -> qu12)

(Physical -> qu13) (Physical -> qu14) (Physical -> qu15)

(Physical -> qu16) (Physical -> qu17) (Physical -> qu18)

(Physical -> qu19) (Physical -> qu20) (sex -> Affective)

(sex -> Physical), latent(Affective Physical) cov(e.Physical*e.Affective)

We can gather terms and abbreviate some things to make the command much easier to read:

sem (Affective -> qu1-qu10) ///

(Physical -> qu11-qu20) ///

(sex -> Affective Physical) ///

, latent(Affective Physical ) ///

cov( e.Physical*e.Affective)

We could then calculate a Wald statistic to test the null hypothesis that there is no association between sex and our affective and physical depression scales.

test sex

( 1) [Affective]sex = 0

( 2) [Physical]sex = 0

chi2( 2) = 2.51

Prob > chi2 = 0.2854

Final thoughts

This is an admittedly oversimplified example – we haven’t considered the fit of the model or considered any alternative models. We have only included one dichotomous independent variable. We might prefer to use a likelihood ratio test or a score test. Those are all very important issues and should not be ignored in a proper data analysis. But my goal was to demonstrate how easy it is to use Stata’s SEM builder to model data such as those arising from the Beck Depression Inventory. Incidentally, if these data were collected using a complex survey design, it would not be difficult to incorporate the sampling structure and sample weights into the analysis. Missing data can be handled easily as well using Full Information Maximum Likelihood (FIML) but those are topics for another day.

If you would like view the slides from my talk, download the data used in this example or view a video demonstration of Stata’s SEM builder using these data, please use the links below. For the dataset, you can also type use followed by the URL for the data to load it directly into Stata.

Slides:

http://stata.com/meeting/sandiego12/materials/sd12_huber.pdf

Data:

http://stata.com/meeting/sandiego12/materials/Huber_2012SanDiego.dta

YouTube video demonstration:

http://www.youtube.com/watch?v=Xj0gBlqwYHI

References

Beck AT, Ward CH, Mendelson M, Mock J, Erbaugh J (June 1961). An inventory for measuring depression. Arch. Gen. Psychiatry 4 (6): 561–71.

Beck AT, Ward C, Mendelson M (1961). Beck Depression Inventory (BDI). Arch Gen Psychiatry 4 (6): 561–571

Beck AT, Steer RA, Ball R, Ranieri W (December 1996). Comparison of Beck Depression Inventories -IA and -II in psychiatric outpatients. Journal of Personality Assessment 67 (3): 588–97

Bollen, KA. (1989). Structural Equations With Latent Variables. New York, NY: John Wiley and Sons

Kline, RB (2011). Principles and Practice of Structural Equation Modeling. New York, NY: Guilford Press

Raykov, T & Marcoulides, GA (2006). A First Course in Structural Equation Modeling. Mahwah, NJ: Lawrence Erlbaum

Schumacker, RE & Lomax, RG (2012) A Beginner’s Guide to Structural Equation Modeling, 3rd Ed. New York, NY: Routledge

Multilevel linear models in Stata, part 1: Components of variance

In the last 15-20 years multilevel modeling has evolved from a specialty area of statistical research into a standard analytical tool used by many applied researchers.

Stata has a lot of multilevel modeling capababilities.

I want to show you how easy it is to fit multilevel models in Stata. Along the way, we’ll unavoidably introduce some of the jargon of multilevel modeling.

I’m going to focus on concepts and ignore many of the details that would be part of a formal data analysis. I’ll give you some suggestions for learning more at the end of the blog.

- The videos

Stata has a friendly dialog box that can assist you in building multilevel models. If you would like a brief introduction using the GUI, you can watch a demonstration on Stata’s YouTube Channel:

Introduction to multilevel linear models in Stata, part 1: The xtmixed command

- Multilevel data

Multilevel data are characterized by a hierarchical structure. A classic example is children nested within classrooms and classrooms nested within schools. The test scores of students within the same classroom may be correlated due to exposure to the same teacher or textbook. Likewise, the average test scores of classes might be correlated within a school due to the similar socioeconomic level of the students.

You may have run across datasets with these kinds of structures in your own work. For our example, I would like to use a dataset that has both longitudinal and classical hierarchical features. You can access this dataset from within Stata by typing the following command:

use http://www.stata-press.com/data/r12/productivity.dta

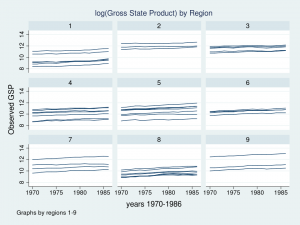

We are going to build a model of gross state product for 48 states in the USA measured annually from 1970 to 1986. The states have been grouped into nine regions based on their economic similarity. For distributional reasons, we will be modeling the logarithm of annual Gross State Product (GSP) but in the interest of readability, I will simply refer to the dependent variable as GSP.

. describe gsp year state region

storage display value

variable name type format label variable label

-----------------------------------------------------------------------------

gsp float %9.0g log(gross state product)

year int %9.0g years 1970-1986

state byte %9.0g states 1-48

region byte %9.0g regions 1-9

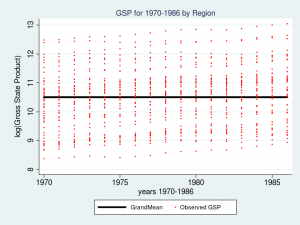

Let’s look at a graph of these data to see what we’re working with.

twoway (line gsp year, connect(ascending)), ///

by(region, title("log(Gross State Product) by Region", size(medsmall)))

Each line represents the trajectory of a state’s (log) GSP over the years 1970 to 1986. The first thing I notice is that the groups of lines are different in each of the nine regions. Some groups of lines seem higher and some groups seem lower. The second thing that I notice is that the slopes of the lines are not the same. I’d like to incorporate those attributes of the data into my model.

- Components of variance

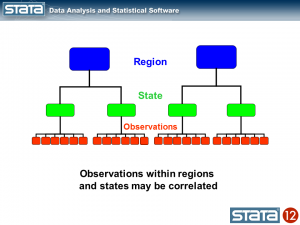

Let’s tackle the vertical differences in the groups of lines first. If we think about the hierarchical structure of these data, I have repeated observations nested within states which are in turn nested within regions. I used color to keep track of the data hierarchy.

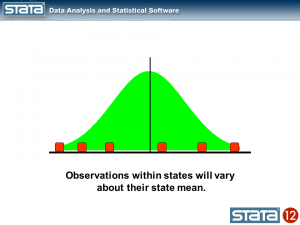

We could compute the mean GSP within each state and note that the observations within in each state vary about their state mean.

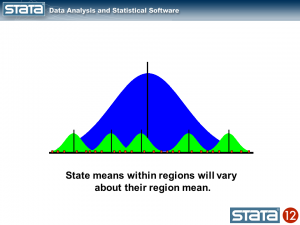

Likewise, we could compute the mean GSP within each region and note that the state means vary about their regional mean.

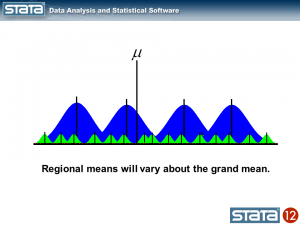

We could also compute a grand mean and note that the regional means vary about the grand mean.

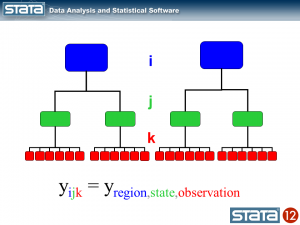

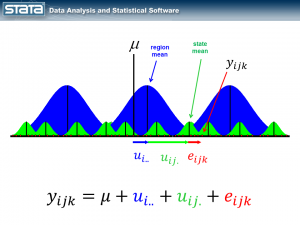

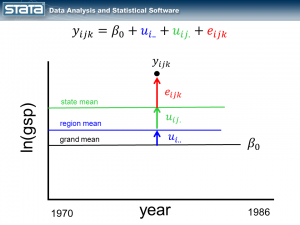

Next, let’s introduce some notation to help us keep track of our mutlilevel structure. In the jargon of multilevel modelling, the repeated measurements of GSP are described as “level 1″, the states are referred to as “level 2″ and the regions are “level 3″. I can add a three-part subscript to each observation to keep track of its place in the hierarchy.

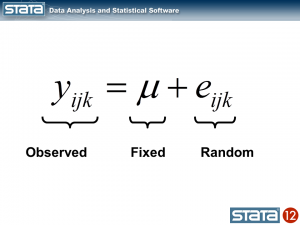

Now let’s think about our model. The simplest regression model is the intercept-only model which is equivalent to the sample mean. The sample mean is the “fixed” part of the model and the difference between the observation and the mean is the residual or “random” part of the model. Econometricians often prefer the term “disturbance”. I’m going to use the symbol μ to denote the fixed part of the model. μ could represent something as simple as the sample mean or it could represent a collection of independent variables and their parameters.

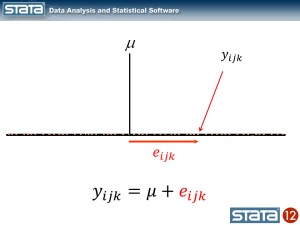

Each observation can then be described in terms of its deviation from the fixed part of the model.

If we computed this deviation of each observation, we could estimate the variability of those deviations. Let’s try that for our data using Stata’s xtmixed command to fit the model:

. xtmixed gsp

Mixed-effects ML regression Number of obs = 816

Wald chi2(0) = .

Log likelihood = -1174.4175 Prob > chi2 = .

------------------------------------------------------------------------------

gsp | Coef. Std. Err. z P>|z| [95% Conf. Interval]

-------------+----------------------------------------------------------------

_cons | 10.50885 .0357249 294.16 0.000 10.43883 10.57887

------------------------------------------------------------------------------

------------------------------------------------------------------------------

Random-effects Parameters | Estimate Std. Err. [95% Conf. Interval]

-----------------------------+------------------------------------------------

sd(Residual) | 1.020506 .0252613 .9721766 1.071238

------------------------------------------------------------------------------

The top table in the output shows the fixed part of the model which looks like any other regression output from Stata, and the bottom table displays the random part of the model. Let’s look at a graph of our model along with the raw data and interpret our results.

predict GrandMean, xb

label var GrandMean "GrandMean"

twoway (line GrandMean year, lcolor(black) lwidth(thick)) ///

(scatter gsp year, mcolor(red) msize(tiny)), ///

ytitle(log(Gross State Product), margin(medsmall)) ///

legend(cols(4) size(small)) ///

title("GSP for 1970-1986 by Region", size(medsmall))

The thick black line in the center of the graph is the estimate of _cons, which is an estimate of the fixed part of model for GSP. In this simple model, _cons is the sample mean which is equal to 10.51. In “Random-effects Parameters” section of the output, sd(Residual) is the average vertical distance between each observation (the red dots) and fixed part of the model (the black line). In this model, sd(Residual) is the estimate of the sample standard deviation which equals 1.02.

At this point you may be thinking to yourself – “That’s not very interesting – I could have done that with Stata’s summarize command”. And you would be correct.

. summ gsp

Variable | Obs Mean Std. Dev. Min Max

-------------+--------------------------------------------------------

gsp | 816 10.50885 1.021132 8.37885 13.04882

But here’s where it does become interesting. Let’s make the random part of the model more complex to account for the hierarchical structure of the data. Consider a single observation, yijk and take another look at its residual.

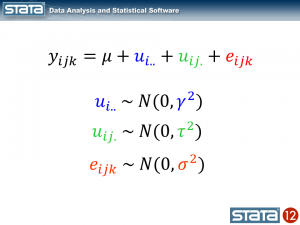

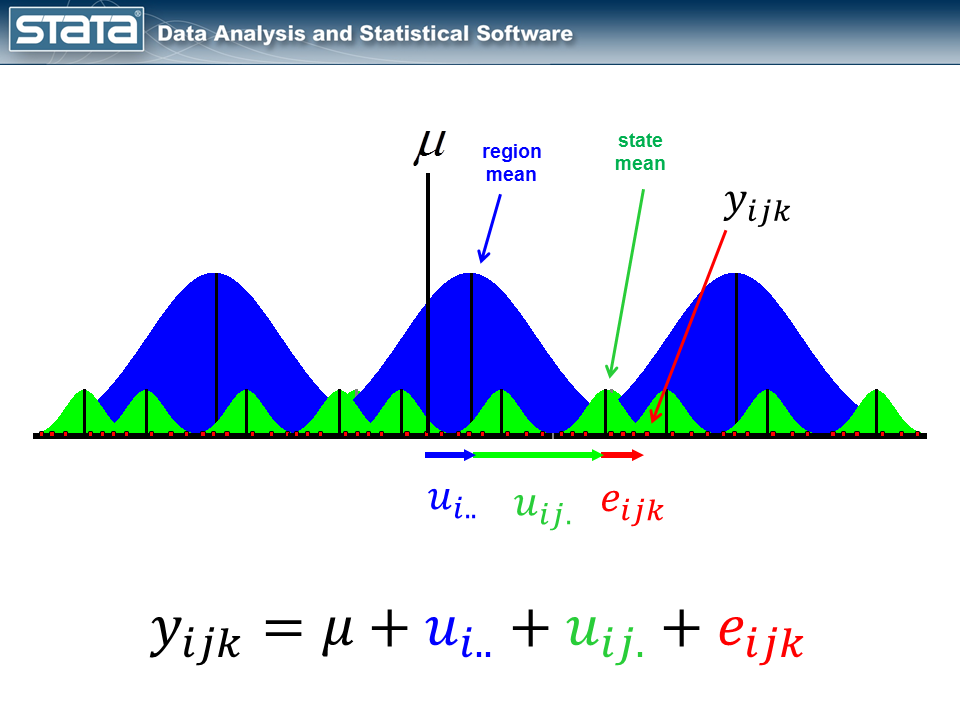

The observation deviates from its state mean by an amount that we will denote eijk. The observation’s state mean deviates from the the regionals mean uij. and the observation’s regional mean deviates from the fixed part of the model, μ, by an amount that we will denote ui... We have partitioned the observation’s residual into three parts, aka “components”, that describe its magnitude relative to the state, region and grand means. If we calculated this set of residuals for each observation, wecould estimate the variability of those residuals and make distributional assumptions about them.

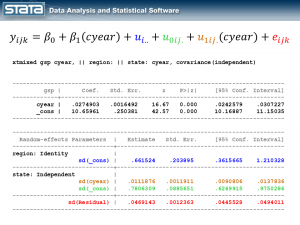

These kinds of models are often called “variance component” models because they estimate the variability accounted for by each level of the hierarchy. We can estimate a variance component model for GSP using Stata’s xtmixed command:

xtmixed gsp, || region: || state:

------------------------------------------------------------------------------

gsp | Coef. Std. Err. z P>|z| [95% Conf. Interval]

-------------+----------------------------------------------------------------

_cons | 10.65961 .2503806 42.57 0.000 10.16887 11.15035

------------------------------------------------------------------------------

------------------------------------------------------------------------------

Random-effects Parameters | Estimate Std. Err. [95% Conf. Interval]

-----------------------------+------------------------------------------------

region: Identity |

sd(_cons) | .6615227 .2038944 .361566 1.210325

-----------------------------+------------------------------------------------

state: Identity |

sd(_cons) | .7797837 .0886614 .6240114 .9744415

-----------------------------+------------------------------------------------

sd(Residual) | .1570457 .0040071 .149385 .1650992

------------------------------------------------------------------------------

The fixed part of the model, _cons, is still the sample mean. But now there are three parameters estimates in the bottom table labeled “Random-effects Parameters”. Each quantifies the average deviation at each level of the hierarchy.

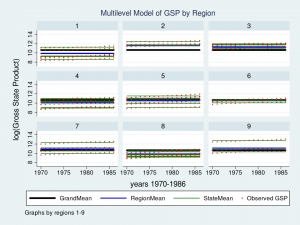

Let’s graph the predictions from our model and see how well they fit the data.

predict GrandMean, xb

label var GrandMean "GrandMean"

predict RegionEffect, reffects level(region)

predict StateEffect, reffects level(state)

gen RegionMean = GrandMean + RegionEffect

gen StateMean = GrandMean + RegionEffect + StateEffect

twoway (line GrandMean year, lcolor(black) lwidth(thick)) ///

(line RegionMean year, lcolor(blue) lwidth(medthick)) ///

(line StateMean year, lcolor(green) connect(ascending)) ///

(scatter gsp year, mcolor(red) msize(tiny)), ///

ytitle(log(Gross State Product), margin(medsmall)) ///

legend(cols(4) size(small)) ///

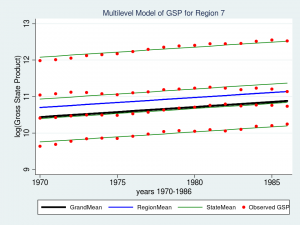

by(region, title("Multilevel Model of GSP by Region", size(medsmall)))

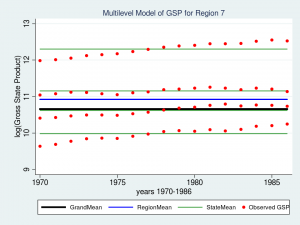

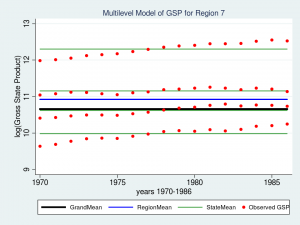

Wow – that’s a nice graph if I do say so myself. It would be impressive for a report or publication, but it’s a little tough to read with all nine regions displayed at once. Let’s take a closer look at Region 7 instead.

twoway (line GrandMean year, lcolor(black) lwidth(thick)) ///

(line RegionMean year, lcolor(blue) lwidth(medthick)) ///

(line StateMean year, lcolor(green) connect(ascending)) ///

(scatter gsp year, mcolor(red) msize(medsmall)) ///

if region ==7, ///

ytitle(log(Gross State Product), margin(medsmall)) ///

legend(cols(4) size(small)) ///

title("Multilevel Model of GSP for Region 7", size(medsmall))

The red dots are the observations of GSP for each state within Region 7. The green lines are the estimated mean GSP within each State and the blue line is the estimated mean GSP within Region 7. The thick black line in the center is the overall grand mean for all nine regions. The model appears to fit the data fairly well but I can’t help noticing that the red dots seem to have an upward slant to them. Our model predicts that GSP is constant within each state and region from 1970 to 1986 when clearly the data show an upward trend.

So we’ve tackled the first feature of our data. We’ve succesfully incorporated the basic hierarchical structure into our model by fitting a variance componentis using Stata’s xtmixed command. But our graph tells us that we aren’t finished yet.

Next time we’ll tackle the second feature of our data — the longitudinal nature of the observations.

- For more information

If you’d like to learn more about modelling multilevel and longitudinal data, check out

Multilevel and Longitudinal Modeling Using Stata, Third Edition

Volume I: Continuous Responses

Volume II: Categorical Responses, Counts, and Survival

by Sophia Rabe-Hesketh and Anders Skrondal

or sign up for our popular public training course “Multilevel/Mixed Models Using Stata“.

There’s a course coming up in Washington, DC on February 7-8, 2013.

Multilevel linear models in Stata, part 2: Longitudinal data

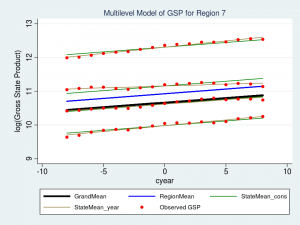

In my last posting, I introduced you to the concepts of hierarchical or “multilevel” data. In today’s blog, I’d like to show you how to use multilevel modeling techniques to analyse longitudinal data with Stata’s xtmixed command.

Last time, we noticed that our data had two features. First, we noticed that the means within each level of the hierarchy were different from each other and we incorporated that into our data analysis by fitting a “variance component” model using Stata’s xtmixed command.

The second feature that we noticed is that repeated measurement of GSP showed an upward trend. We’ll pick up where we left off last time and stick to the concepts again and you can refer to the references at the end to learn more about the details.

The videos

Stata has a very friendly dialog box that can assist you in building multilevel models. If you would like a brief introduction using the GUI, you can watch a demonstration on Stata’s YouTube Channel:

Introduction to multilevel linear models in Stata, part 2: Longitudinal data

Longitudinal data

I’m often asked by beginning data analysts – “What’s the difference between longitudinal data and time-series data? Aren’t they the same thing?”.

The confusion is understandable — both types of data involve some measurement of time. But the answer is no, they are not the same thing.

Univariate time series data typically arise from the collection of many data points over time from a single source, such as from a person, country, financial instrument, etc.

Longitudinal data typically arise from collecting a few observations over time from many sources, such as a few blood pressure measurements from many people.

There are some multivariate time series that blur this distinction but a rule of thumb for distinguishing between the two is that time series have more repeated observations than subjects while longitudinal data have more subjects than repeated observations.

Because our GSP data from last time involve 17 measurements from 48 states (more sources than measurements), we will treat them as longitudinal data.

GSP Data: http://www.stata-press.com/data/r12/productivity.dta

Random intercept models

As I mentioned last time, repeated observations on a group of individuals can be conceptualized as multilevel data and modeled just as any other multilevel data. We left off last time with a variance component model for GSP (Gross State Product, logged) and noted that our model assumed a constant GSP over time while the data showed a clear upward trend.

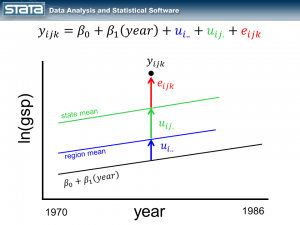

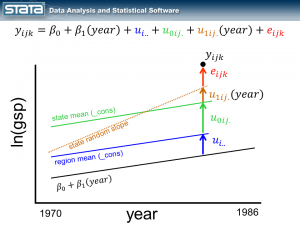

If we consider a single observation and think about our model, nothing in the fixed or random part of the models is a function of time.

Let’s begin by adding the variable year to the fixed part of our model.

As we expected, our grand mean has become a linear regression which more accurately reflects the change over time in GSP. What might be unexpected is that each state’s and region’s mean has changed as well and now has the same slope as the regression line. This is because none of the random components of our model are a function of time. Let’s fit this model with the xtmixed command:

. xtmixed gsp year, || region: || state:

------------------------------------------------------------------------------

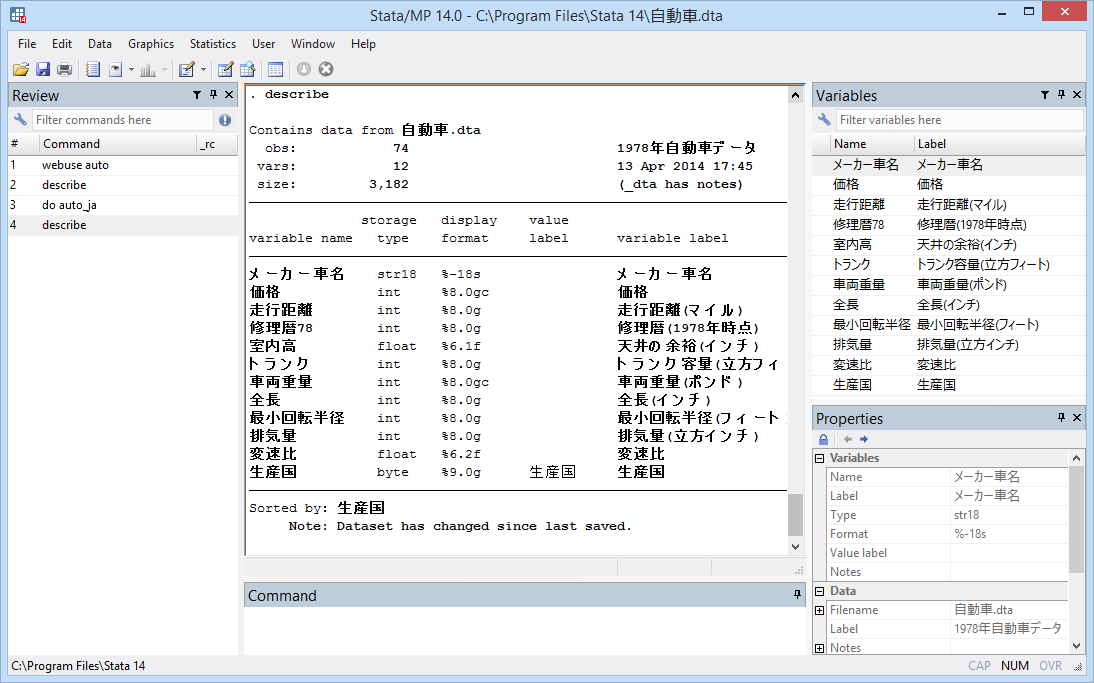

gsp | Coef. Std. Err. z P>|z| [95% Conf. Interval]